Background: I ve been relearning Rust (more about that in a separate post, some time later), and in doing so, I chose to implement the low-level parts of git (I ll touch the why in that separate post I just promised).

Disclaimer: It s friday. This is not entirely(?) a serious post.

So, I was looking at

Documentation/technical/index-format.txt, and saw:

32-bit number of index entries.

What? The index/staging area can t handle more than ~4.3 billion files?

There I was, writing Rust code to write out the index.

try!(out.write_u32::<NetworkOrder>(self.entries.len()));

(For people familiar with the

byteorder crate and wondering what NetworkOrder is, I have a

use byteorder::BigEndian as NetworkOrder)

And the Rust compiler rightfully barfed:

error: mismatched types:

expected u32 ,

found usize [E0308]

And there I was, wondering: mmmm should I just add

as u32 and silently truncate or hey what does git do?

And it turns out, git

uses an unsigned int to track the number of entries in the first place, so there is no truncation happening.

Then I thought but what happens when

cache_nr reaches the max?

Well, it turns out there s only one obvious place where

the field is incremented.

What? Holy coffin nails, Batman! No overflow check?

Wait a second, look 3 lines above that:

ALLOC_GROW(istate->cache, istate->cache_nr + 1, istate->cache_alloc);

Yeah, obviously, if you re incrementing

cache_nr, you already have that many entries in memory. So, how big would that array be?

struct cache_entry **cache;

So it s an array of pointers, assuming 64-bits pointers, that s ~34.3 GB. But, all those

cache_nr entries are in memory too. How big is a cache entry?

struct cache_entry

struct hashmap_entry ent;

struct stat_data ce_stat_data;

unsigned int ce_mode;

unsigned int ce_flags;

unsigned int ce_namelen;

unsigned int index; /* for link extension */

unsigned char sha1[20];

char name[FLEX_ARRAY]; /* more */

;

So, 4 ints, 20 bytes, and as many bytes as necessary to hold a path. And two inline structs. How big are they?

struct hashmap_entry

struct hashmap_entry *next;

unsigned int hash;

;

struct stat_data

struct cache_time sd_ctime;

struct cache_time sd_mtime;

unsigned int sd_dev;

unsigned int sd_ino;

unsigned int sd_uid;

unsigned int sd_gid;

unsigned int sd_size;

;

Woohoo, nested structs.

struct cache_time

uint32_t sec;

uint32_t nsec;

;

So all in all, we re looking at 1 + 2 + 2 + 5 + 4 32-bit integers, 1 64-bits pointer, 2 32-bits padding, 20 bytes of sha1, for a total of 92 bytes, not counting the variable size for file paths.

The average path length in

mozilla-central, which only has slightly over 140 thousands of them, is 59 (including the terminal NUL character).

Let s conservatively assume our crazy repository would have the same average, making the average cache entry 151 bytes.

But memory allocators usually allocate more than requested. In this particular case, with the default allocator on GNU/Linux, it s

156 (weirdly enough, it s 152 on my machine).

156 times 4.3 billion 670 GB. Plus the 34.3 from the array of pointers: 704.3 GB. Of RAM. Not counting the memory allocator overhead of handling that. Or all the other things git might have in memory as well (which apparently involves a hashmap, too, but I won t look at that, I promise).

I

think one would have run out of memory before hitting that integer overflow.

Interestingly, looking at

Documentation/technical/index-format.txt again, the on-disk format appears smaller, with 62 bytes per file instead of 92, so the corresponding index file would be smaller. (And in version 4, paths are prefix-compressed, so paths would be smaller too).

But having an index

that large supposes those files are checked out. So let s say I have an empty ext4 file system as large as possible (which I m told is 2^60 bytes (1.15

billion gigabytes)). Creating a small empty ext4 tells me at least 10 inodes are allocated by default. I seem to remember there s at least one reserved for the journal, there s the top-level directory, and there s

lost+found ; there apparently are more. Obviously, on that very large file system, We d have a git repository.

git init with an empty template creates 9 files and directories, so that s 19 more inodes taken. But

git init doesn t create an index, and doesn t have any objects. We d thus have at least one file for our hundreds of gigabyte index, and at least 2 who-knows-how-big files for the objects (a pack and its index). How many inodes does that leave us with?

The Linux kernel source tells us the number of inodes in an ext4 file system is stored

in a 32-bits integer.

So all in all, if we had an empty very large file system, we d

only be able to store, at best, 2^32 22 files And we wouldn t even be able to get

cache_nr to overflow.

while following the rules. Because the index can keep files that have been removed, it is actually possible to fill the index without filling the file system. After hours (days? months? years? decades?*) of running

seq 0 4294967296 while read i; do touch $i; git update-index --add $i; rm $i; done

One should be able to reach the integer overflow. But that d still require hundreds of gigabytes of disk space

and even more RAM.

- At the rate it was possible to add files to the index when I tried (yeah, I tried), for a few minutes, and assuming a constant rate, the estimate is close to 2 years. But the time spent reading and writing the index file increases linearly with its size, so the longer it d run, the longer it d take.

Ok, it s actually much faster to do it hundreds of thousand files at a time, with something like:

seq 0 100000 4294967296 while read i; do j=$(seq $i $(($i + 99999))); touch $j; git update-index --add $j; rm $j; done

At the rate the first million files were added, still assuming a constant rate, it would take about a month on my machine. Considering reading/writing a list of a million files is a thousand times faster than reading a list of a billion files, assuming linear increase, we re still talking about decades, and plentiful RAM. Fun fact: after leaving it run for 5 times as much as it had run for the first million files, it hasn t even done half more

One could generate the necessary hundreds-of-gigabytes index manually, that wouldn t be too hard, and assuming it could be done at about 1 GB/s on a good machine with a good SSD, we d be able to craft a close-to-explosion index within a few minutes. But we d still lack the RAM to load it.

So, here is the open question: should I report that integer overflow?

Wow, that was some serious procrastination.

Edit: Epilogue: Actually, oops, there is a separate integer overflow on the

reading side that can trigger a buffer overflow, that doesn t actually require a large index, just a crafted header, demonstrating that yes, not all integer overflows are equal.

Weblate is growing quite well in last months, but sometimes it's development is really driven by people who complain instead of following some roadmap with higher goals. I think it's time to change it at least a little bit. In order to get broader feedback I've sent out short survey to active project owners in Hosted Weblate week ago.

I've decided to target at smaller audience for now, though publicly open survey might follow later (but it's always harder to evaluate feedback across different user groups).

Overall feelings were really positive, most people find Weblate better than other similar services they have used. This is really something I like to hear :-).

Weblate is growing quite well in last months, but sometimes it's development is really driven by people who complain instead of following some roadmap with higher goals. I think it's time to change it at least a little bit. In order to get broader feedback I've sent out short survey to active project owners in Hosted Weblate week ago.

I've decided to target at smaller audience for now, though publicly open survey might follow later (but it's always harder to evaluate feedback across different user groups).

Overall feelings were really positive, most people find Weblate better than other similar services they have used. This is really something I like to hear :-).

But the most important part for me was where users want to see improvements. This somehow matches my expectation that we really should improve the user interface.

But the most important part for me was where users want to see improvements. This somehow matches my expectation that we really should improve the user interface.

We have quite a lot features, which are really hidden in the user interface. Also interface for some of the features is far from being intuitive. This all probably comes from the fact that we really don't have anybody experienced with creating user interfaces right now. It's time to find somebody who will help us. In case you are able to help or know somebody who might be interested in helping, please get in touch. Weblate is free software, but this can still be paid job.

Last part of the survey was focused on some particular features, but the outcome was not as clear as I hoped for as almost all feature group attracted about same attention (with one exception being extending the API, which was not really wanted by most of the users).

Overall I think doing some survey like this is useful and I will certainly repeat it (probably yearly or so), to see where we're moving and what our users want. Having feedback from users is important for every project and this seemed to worked quite well. Anyway if you have further feedback, don't hesitate to use our issue tracker at GitHub or contact me directly.

We have quite a lot features, which are really hidden in the user interface. Also interface for some of the features is far from being intuitive. This all probably comes from the fact that we really don't have anybody experienced with creating user interfaces right now. It's time to find somebody who will help us. In case you are able to help or know somebody who might be interested in helping, please get in touch. Weblate is free software, but this can still be paid job.

Last part of the survey was focused on some particular features, but the outcome was not as clear as I hoped for as almost all feature group attracted about same attention (with one exception being extending the API, which was not really wanted by most of the users).

Overall I think doing some survey like this is useful and I will certainly repeat it (probably yearly or so), to see where we're moving and what our users want. Having feedback from users is important for every project and this seemed to worked quite well. Anyway if you have further feedback, don't hesitate to use our issue tracker at GitHub or contact me directly.

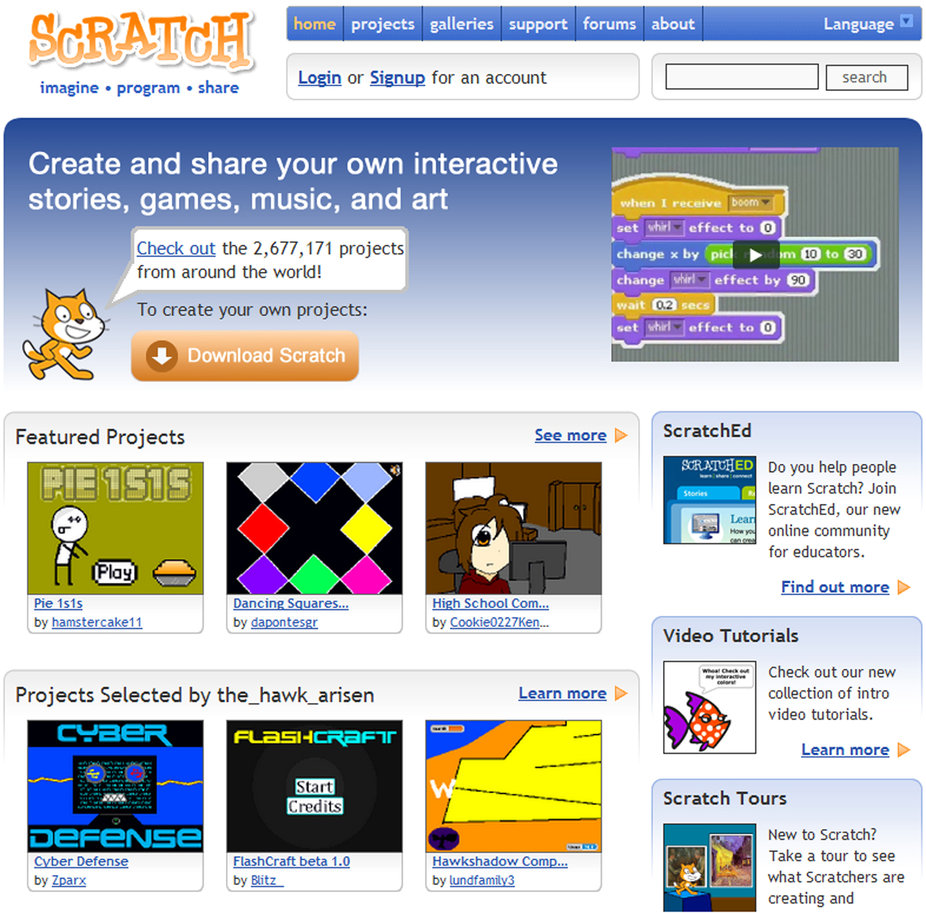

Scratch is a

Scratch is a

Project metadata

Project metadata User metadata

User metadata Site-wide statistics

Site-wide statistics Simple demonstration of Scratch Community Blocks

Simple demonstration of Scratch Community Blocks Doughnut visualization

Doughnut visualization Ice-cream visualization

Ice-cream visualization Data-driven doll dress up

Data-driven doll dress up As web search engines and IRC seems to be of no help, maybe someone

here has a helpful idea. I have some service written in python that

comes with a .service file for systemd. I now want to build&install a

working service file from the software's setup.py. I can override

the build/build_py commands of setuptools, however that way I still

lack knowledge wrt. the bindir/prefix where my service script will be

installed.

Solution

Turns out, if you override the

As web search engines and IRC seems to be of no help, maybe someone

here has a helpful idea. I have some service written in python that

comes with a .service file for systemd. I now want to build&install a

working service file from the software's setup.py. I can override

the build/build_py commands of setuptools, however that way I still

lack knowledge wrt. the bindir/prefix where my service script will be

installed.

Solution

Turns out, if you override the